We’re at a pivotal moment in the AI revolution. By mid-2025, AI has fundamentally changed the way entire industries operate. From healthcare to finance and from entertainment to autonomous systems, the infrastructure supporting these technologies has evolved dramatically over the past year, redefining how we live, work, and interact. With the global AI infrastructure market expected to reach $223.85 billion by 2029 at a 31.9% compound annual growth rate (CAGR), we’re witnessing rapid innovation in hardware, networking, and cloud technologies that is changing how organizations deploy and scale AI.
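The growth figure above can be sanity-checked with the standard compound-growth formula, end value = start value × (1 + r)^years. The sketch below assumes a 2024 base year (five years of growth to 2029), which the forecast as quoted does not state; a different base year would change the implied starting value. The function names are illustrative.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert end = start * (1 + cagr) ** years to recover the starting value."""
    return end_value / (1 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> list[float]:
    """Year-by-year values compounding at a constant annual rate."""
    return [start_value * (1 + cagr) ** y for y in range(years + 1)]


# Assumption: 2024 is the base year, so 2029 is five years out.
start = implied_start_value(223.85, 0.319, 5)
print(f"Implied 2024 market size: ${start:.2f}B")
for year, value in zip(range(2024, 2030), project(start, 0.319, 5)):
    print(year, round(value, 2))
```

Under that assumption the forecast implies a market of roughly $56 billion in 2024, roughly quadrupling over five years.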

The Hardware Revolution: Beyond Traditional Computing

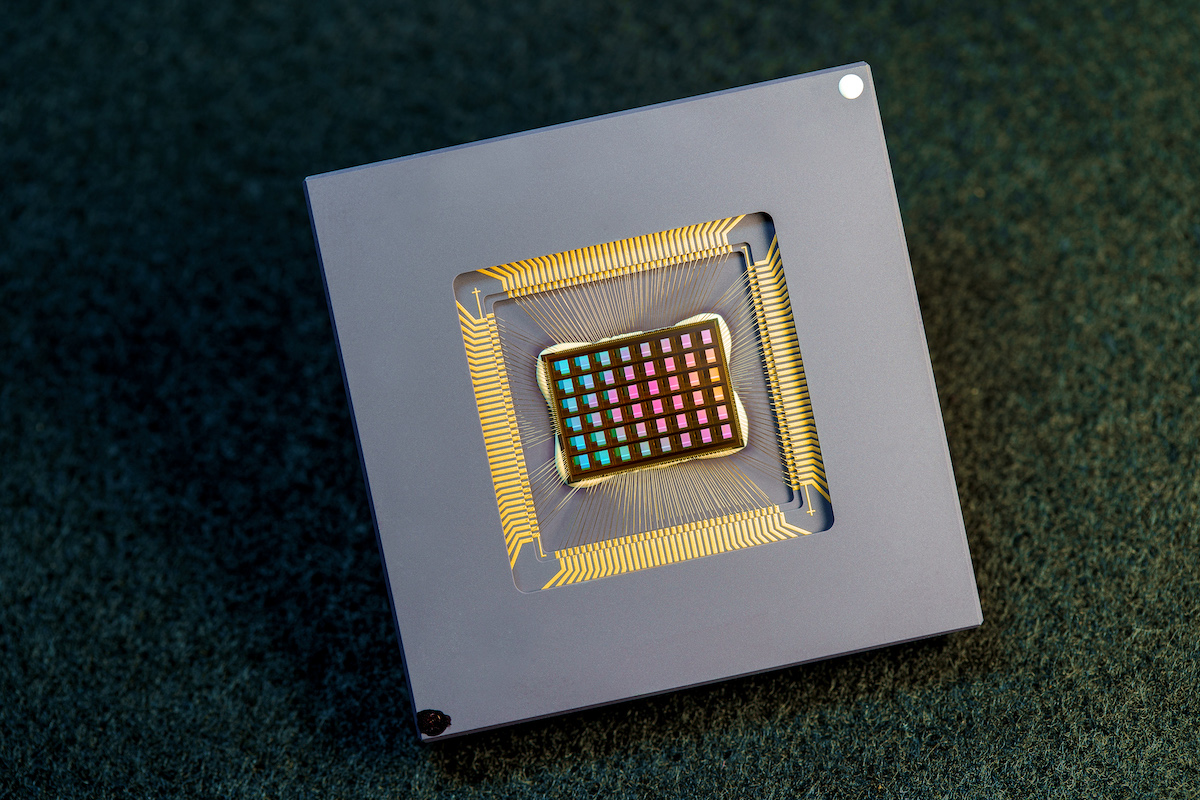

Quantum and Neuromorphic Computing Mature

While GPUs and TPUs dominated AI hardware in previous years, 2025 has brought growing momentum behind new computing paradigms. Quantum computing is beginning to move from theoretical applications toward practical implementations, and the financial industry is poised to become an early adopter. Companies like IBM, Google, and Intel have reported significant progress in quantum machine learning (QML), and these advances hold out the promise of large, in some cases exponential, speedups for specific optimization and machine learning problems.

Neuromorphic computing has also matured significantly. Notable systems include the large-scale SpiNNaker and BrainScaleS neuromorphic machines, and Intel’s Loihi 2 research chips are now being deployed in small form factors such as drones, satellites, and smart cars. Newer players like Neuronova are developing energy-efficient neuromorphic chips to squeeze more intelligence out of every watt, a sign of the field’s rapid commercialization.
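Neuromorphic chips such as Loihi 2 compute with spiking neurons that do work only when input events arrive, which is where much of their energy advantage is expected to come from. The leaky integrate-and-fire (LIF) model below is a common textbook abstraction of a spiking neuron, not the circuit used on any particular chip; the time constant, threshold, and input values are illustrative assumptions.

```python
def simulate_lif(inputs, tau=10.0, threshold=1.0, v_reset=0.0, dt=1.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays toward zero with time constant
    `tau`, adds the input current, and emits a spike (then resets) when it
    reaches `threshold`. Returns the time steps at which the neuron spiked.
    """
    decay = 1.0 - dt / tau  # fraction of potential retained per step
    v = v_reset
    spikes = []
    for t, current in enumerate(inputs):
        v = v * decay + current
        if v >= threshold:
            spikes.append(t)
            v = v_reset
    return spikes


# A brief burst of input in an otherwise silent stream.
print(simulate_lif([0.0] * 5 + [0.6, 0.6] + [0.0] * 5))  # [6]
print(simulate_lif([0.0] * 10))                           # []
```

With no input the neuron never fires; on event-driven hardware, activity (and roughly, energy) then scales with the number of spikes rather than with clock cycles.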

The Edge Computing Revolution

AI has moved beyond centralized data centers into edge devices, and edge deployment is now an everyday operational reality. In the first half of 2025, AI-powered devices, from smartphones to drones to autonomous vehicles, routinely run inference locally, dramatically reducing latency and enabling real-time decision-making.

The widespread deployment of 5G networks, coupled with early 6G pilot programs, provides the low-latency, high-bandwidth connectivity that has made edge AI not just possible but practical. Edge AI platforms such as NVIDIA Jetson modules and Qualcomm Snapdragon processors have driven this transformation, enabling powerful local processing across industries including healthcare (smart medical devices), transportation (autonomous vehicles), and smart city infrastructure.

Multi-Cloud and Hybrid Strategies

Enterprise AI infrastructure in 2025 is characterized by sophisticated hybrid and multi-cloud strategies. Organizations are strategically avoiding vendor lock-in while optimizing for performance, cost, and compliance. This approach is supported by increasingly AI-optimized cloud services that allow companies to scale workloads across different environments seamlessly. The growth of cloud-native AI platforms optimized for cross-cloud interoperability enables enterprises to run AI workloads on their terms, selecting the best-performing infrastructure for each specific use case.
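Selecting “the best-performing infrastructure for each specific use case” is, at its core, a constrained choice. The sketch below picks the cheapest environment that satisfies a workload’s latency and data-residency requirements. The environment names, prices, and latencies are hypothetical placeholders, not real provider offerings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Environment:
    name: str
    region: str
    cost_per_hour: float  # hypothetical price for one accelerator-hour
    latency_ms: float     # hypothetical p95 latency to end users


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float
    allowed_regions: frozenset  # data-residency constraint


def place(workload: Workload, environments: list[Environment]) -> Optional[Environment]:
    """Return the cheapest environment meeting latency and residency constraints."""
    eligible = [
        env for env in environments
        if env.latency_ms <= workload.max_latency_ms
        and env.region in workload.allowed_regions
    ]
    return min(eligible, key=lambda env: env.cost_per_hour, default=None)


envs = [
    Environment("cloud-a-us", "us", 2.50, 40.0),
    Environment("cloud-b-eu", "eu", 2.10, 35.0),
    Environment("on-prem-eu", "eu", 1.80, 80.0),
]
chat = Workload("chat-inference", 50.0, frozenset({"eu"}))
print(place(chat, envs).name)  # cloud-b-eu
```

A production scheduler would also weigh egress fees, available capacity, and compliance certifications; keeping the policy as a filter followed by a minimum makes each placement decision easy to audit.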

Current Market Realities and Regulatory Landscape

AI Agents and Autonomous Systems

As 2025 progresses, AI agents are evolving into autonomous programs that can scope out a project and complete it using the tools they need, with little or no help from human partners. This evolution has been driven by significant advances in AI reasoning capabilities and by the development of more sophisticated frontier models.
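Mechanically, most tool-using agents run a loop: a model proposes the next action, the runtime executes the named tool, and the result is fed back until the model declares the task done. The sketch below stubs the model with a fixed plan so it runs without any API; `fake_planner`, the tool names, and the step limit are hypothetical. The explicit step cap reflects a common safeguard for bounding what even a highly autonomous agent can do.

```python
from typing import Callable

# Hypothetical tools; real agents would wrap APIs, shells, databases, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"3 results for '{query}'",
    "write_file": lambda text: f"wrote {len(text)} chars",
}


def fake_planner(goal: str, history: list) -> tuple[str, str]:
    """Stand-in for a model call: returns (tool_name, argument) or ('finish', summary)."""
    plan = [("search", goal), ("write_file", f"report on {goal}")]
    if len(history) < len(plan):
        return plan[len(history)]
    return ("finish", f"completed {len(history)} steps")


def run_agent(goal: str, planner=fake_planner, max_steps: int = 10) -> tuple[str, list]:
    history = []  # (tool, argument, observation) triples fed back to the planner
    for _ in range(max_steps):
        tool, arg = planner(goal, history)
        if tool == "finish":
            return arg, history
        observation = TOOLS[tool](arg)  # execute the chosen tool
        history.append((tool, arg, observation))
    return "stopped: step limit reached", history


result, steps = run_agent("edge AI chips")
print(result)  # completed 2 steps
for step in steps:
    print(step)
```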

Regulatory Developments

The regulatory landscape has evolved significantly in 2025. China implemented new regulations (effective July 2025) requiring algorithmic transparency and watermarking of AI-generated media. Government agencies are also becoming adopters: the U.S. FDA has launched its first agency-wide AI tool, called “INTACT,” to enhance operational efficiency and public service delivery. Together, these developments point to a maturing policy environment that is actively shaping AI deployment strategies.

Proven Use Cases Now Driving Infrastructure at Scale

Scaling AI Model Training

Training AI models, particularly deep learning systems, has become the backbone of enterprise AI strategies. Throughout 2025, large-scale training of complex models for natural language processing, computer vision, and reinforcement learning has driven unprecedented demand for specialized hardware, including TPUs, GPUs, and FPGAs. Distributed training techniques such as data parallelism (splitting each batch across replicas of the model) and model parallelism (splitting the model itself across devices) have enabled organizations to train models faster and more efficiently on multi-node clusters.
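In data parallelism, every worker holds a full copy of the model, processes a different shard of the data, and the workers average their gradients (an all-reduce) before each update so that all replicas stay identical. The single-process NumPy sketch below simulates this for linear regression. The worker count, learning rate, and synthetic data are assumptions, and a real cluster would use a framework’s collective communication operations rather than a Python mean.

```python
import numpy as np


def local_gradient(w, X, y):
    """Mean-squared-error gradient of a linear model on one worker's shard."""
    residual = X @ w - y
    return 2.0 * X.T @ residual / len(y)


def data_parallel_sgd(X, y, n_workers=4, lr=0.1, steps=200):
    w = np.zeros(X.shape[1])
    shards = list(zip(np.array_split(X, n_workers), np.array_split(y, n_workers)))
    for _ in range(steps):
        # Each worker computes a gradient on its own shard (in parallel on a real cluster)...
        grads = [local_gradient(w, Xs, ys) for Xs, ys in shards]
        # ...then an all-reduce averages them so every replica applies the same update.
        w -= lr * np.mean(grads, axis=0)
    return w


rng = np.random.default_rng(0)
X = rng.normal(size=(400, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + 0.01 * rng.normal(size=400)
print(np.round(data_parallel_sgd(X, y), 2))
```

Because the shards are equal in size here, the averaged gradient equals the full-batch gradient, so adding workers speeds up each step without changing the result. Model parallelism instead splits the parameters themselves when a model is too large for one device.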

Real-Time Inference and Edge AI Implementation

Real-time inference, the use of trained models to make predictions on live data, has become the primary driver of widespread AI adoption throughout 2025. Industries like autonomous vehicles, robotics, and retail now routinely demand faster, more efficient AI infrastructure. Self-driving cars exemplify this: their AI models process sensor data in real time on edge AI infrastructure, keeping processing fast and data on the vehicle.
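A quick calculation shows why self-driving systems keep inference on the vehicle. The sketch computes how far a car travels while waiting for a decision. The speed and the two latency figures (local on-board inference versus a round trip to a remote data center) are illustrative assumptions, not measurements.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while waiting `latency_ms` for a model's decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Illustrative assumptions: 100 km/h, 10 ms on-board vs 100 ms cloud round trip.
for label, latency in [("on-board", 10), ("cloud round trip", 100)]:
    print(f"{label}: {distance_during_latency(100, latency):.2f} m")
```

Under these assumptions the tenfold latency gap amounts to about 2.5 m of extra travel before the vehicle can react, and a network dropout would make the cloud path unavailable altogether.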

Generative AI for Content Creation at Scale

Generative AI has become a transformative force across industries throughout 2025. Content generation demands have fundamentally shifted AI infrastructure requirements, with businesses implementing high-throughput processing for both training and inference tasks at unprecedented scales. Creative industries—entertainment, advertising, fashion, and design—now routinely use AI-generated content for video production, game design, and multimedia creation.

AI-Powered Cybersecurity

As cyber threats become more sophisticated, AI-driven cybersecurity solutions are being adopted rapidly. In 2025, AI systems detect and respond to security threats in real time, from network breaches to fraud, often by flagging anomalies in normal activity. These systems require powerful AI infrastructure capable of processing vast amounts of network traffic and security logs as they arrive. AI also plays a crucial role in predictive threat detection, using machine learning models to forecast potential security breaches before they occur.
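Many log-based detectors start from a simple statistical baseline before moving to learned models. The sketch flags time windows whose event count (for example, failed logins per minute) rises above a trailing baseline by more than a chosen number of standard deviations. The window size, threshold, and sample data are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(counts: list[int], window: int = 10, z_threshold: float = 3.0) -> list[int]:
    """Return indices whose count lies more than `z_threshold` standard
    deviations above the mean of the preceding `window` counts."""
    anomalies = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            sigma = 1e-9  # avoid division by zero on a perfectly flat baseline
        if (counts[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


failed_logins_per_minute = [4, 5, 3, 6, 4, 5, 4, 3, 5, 4, 5, 48, 4, 5]
print(flag_anomalies(failed_logins_per_minute))  # [11]
```

The spike itself inflates the baseline for the following windows; production systems typically use robust statistics (such as the median and median absolute deviation) or exclude already-flagged points from the baseline.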

Strategic Partnerships and Acquisitions Shaping the Landscape

Major Infrastructure Consolidation

The AI infrastructure market is experiencing unprecedented consolidation as companies race to secure power and computing resources. In a landmark move on July 7, 2025, cloud-computing company CoreWeave announced its acquisition of Core Scientific for $9 billion, demonstrating the critical importance of power infrastructure in the AI economy. This all-stock deal brings 1.3 gigawatts of power in-house—enough electricity to power nearly 1 million homes—while eliminating roughly $10 billion in future costs.
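The “nearly 1 million homes” comparison is easy to check: 1.3 GW spread across one million homes is 1.3 kW of continuous draw per home. The sketch below converts an assumed average household consumption of about 10,500 kWh per year (a round figure chosen for illustration, not taken from the deal announcement) into average kilowatts and counts how many such homes 1.3 GW could supply.

```python
HOURS_PER_YEAR = 8760


def homes_powered(capacity_gw: float, kwh_per_home_per_year: float) -> float:
    """Number of homes whose average continuous draw fits within `capacity_gw`."""
    avg_home_kw = kwh_per_home_per_year / HOURS_PER_YEAR
    return capacity_gw * 1_000_000 / avg_home_kw  # 1 GW = 1,000,000 kW


# Assumption: an average household uses roughly 10,500 kWh per year.
print(f"{homes_powered(1.3, 10_500):,.0f} homes")
```

The result, roughly 1.08 million homes, is consistent with the quoted figure. It compares capacity with average rather than peak household demand, so it is best read as an order-of-magnitude check.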

The acquisition is particularly significant as it represents the transformation of crypto mining infrastructure into AI data centers. This strategic move positions CoreWeave to compete more effectively in the power-intensive AI race, highlighting how energy capacity has become as crucial as compute power in AI infrastructure planning.

AI Hardware Provider Collaborations

Leading AI hardware vendors, including NVIDIA, Intel, AMD, and Google, continue to provide the compute power needed for large-scale AI workloads. In 2025, partnerships with these vendors have become increasingly critical as companies seek specialized solutions tailored to specific AI workloads. AI accelerators, including FPGAs and ASICs, play a significant role in making AI more efficient and affordable.

Edge Computing and Networking Partnerships

Collaborations with edge computing providers such as Qualcomm, NVIDIA (with its Jetson platform), and AWS (with Wavelength) have become increasingly important for deploying AI at the edge. These partnerships help optimize AI for real-time inference across IoT devices, autonomous systems, and smart cities. Networking vendors specializing in high-bandwidth, low-latency technologies, 5G today and eventually 6G, are critical to edge AI success.

The ROI Reality Check: Beyond the Hype

Despite remarkable technological advances and massive infrastructure investments, the AI industry in 2025 faces a fundamental challenge: demonstrating tangible return on investment. Many new technologies follow a similar path, in which a period of hype is followed by a fall. Launched with huge promise, many AI projects have ended up in the “trough of disillusionment,” where expectations meet reality. A recent McKinsey report found that 77% of businesses have yet to see cost savings from AI, highlighting the gap between technological capability and realized business value.

This ROI challenge is driving a fundamental shift in AI operations approaches:

Infrastructure Efficiency Focus: Organizations are moving from “AI-first” to “efficiency-first” strategies, prioritizing infrastructure investments that deliver measurable cost reductions rather than just capability expansion.

Operational Metrics Evolution: AI teams are developing new KPIs that measure business impact rather than just technical performance, including cost per inference, energy efficiency per model, and time-to-value for AI implementations.

Pragmatic Deployment Strategies: The focus has shifted from deploying the most advanced AI to deploying the most cost-effective AI that meets specific business needs, often favoring simpler, more reliable solutions over cutting-edge alternatives.

Energy and Power as the New Bottleneck: Power capacity has emerged as the primary constraint in AI infrastructure planning, and organizations now plan AI deployments around power availability rather than computational needs alone. Neuromorphic computing presents a potential solution: chips like Intel’s Loihi 2 promise significantly more processing per watt, potentially reducing the total cost of ownership for AI workloads.
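Several of the metrics above (cost per inference, energy efficiency, and planning around power availability) reduce to simple ratios once the inputs are measured. The sketch below computes cost per 1,000 inferences, energy per inference, and how many accelerators a site’s power envelope can support, using power usage effectiveness (PUE, total facility power divided by IT power). The field names, function names, and every number are hypothetical; real inputs would come from billing exports, power telemetry, and facility specifications.

```python
import math
from dataclasses import dataclass


@dataclass
class ServingPeriod:
    inferences: int          # requests served in the period
    compute_cost_usd: float  # accelerator and hosting cost for the period
    energy_kwh: float        # metered energy used by the serving fleet


def cost_per_1k_inferences(p: ServingPeriod) -> float:
    return p.compute_cost_usd / p.inferences * 1000


def joules_per_inference(p: ServingPeriod) -> float:
    return p.energy_kwh * 3.6e6 / p.inferences  # 1 kWh = 3.6 million joules


def max_accelerators(site_mw: float, pue: float, watts_per_accelerator: float) -> int:
    """Accelerators that fit in a site's power envelope.

    PUE = total facility power / IT power, so IT power = site power / PUE.
    `watts_per_accelerator` should include each accelerator's share of host
    CPUs, memory, and networking, not just the chip itself.
    """
    it_watts = site_mw * 1_000_000 / pue
    return math.floor(it_watts / watts_per_accelerator)


# Hypothetical month of serving data and a hypothetical 50 MW site.
month = ServingPeriod(inferences=50_000_000, compute_cost_usd=42_000.0, energy_kwh=18_000.0)
print(f"${cost_per_1k_inferences(month):.2f} per 1k inferences")
print(f"{joules_per_inference(month):.0f} J per inference")
print(max_accelerators(site_mw=50, pue=1.3, watts_per_accelerator=1400), "accelerators")
```

Tracking these ratios over time, rather than raw spend, shows whether changes such as batching or quantization actually lower unit cost. Likewise, halving the watts per accelerator roughly doubles how many fit within the same power envelope, which is why efficiency gains translate directly into capacity.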

Key Customer Segments Driving Growth

Enterprise AI Adoption

Large enterprises across finance, healthcare, manufacturing, and retail have become the primary drivers of demand for scalable AI infrastructure throughout 2025. These organizations now routinely deploy powerful compute resources for predictive analytics, supply chain optimization, and personalized customer experiences. Their operational need for automated data pipelines, AI-driven decision-making, and secure, compliant AI systems continues to fuel demand for specialized AI infrastructure.

AI-First Startups and Scale-ups

AI startups, particularly those focused on autonomous systems, robotics, and generative AI, have successfully leveraged cutting-edge infrastructure to support their innovative solutions. Many of these companies rely on AI-as-a-Service (AIaaS) platforms, which have matured to enable access to high-performance compute without heavy infrastructure investments.

Government and Public Sector Implementation

Government and public sector entities have significantly increased their AI implementation throughout 2025, representing a rapidly growing market segment with unique requirements for security, compliance, and scale. The U.S. FDA’s deployment of INTACT demonstrates the sector’s commitment to AI adoption for enhancing operational efficiency and public service delivery.

AI Operations (AI Ops) Transformation in 2025

Operational Complexity in Distributed AI

The shift to edge computing and multi-cloud strategies has sharply increased operational complexity. AI Ops teams must now manage distributed model deployment across thousands of edge devices, real-time monitoring of inference performance across multiple locations, automated failover and load balancing across hybrid cloud environments, and model versioning and updates on edge devices that are disconnected or only intermittently connected.
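One common pattern for updating intermittently connected devices is desired-state reconciliation: a control plane decides which model version each device should run, and each device converges toward it whenever it is online, while a staged (canary) rollout limits how many devices move at once. The sketch below is a hypothetical in-memory illustration; the device IDs, version strings, and hash-based canary cohort are assumptions.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Device:
    device_id: str
    running_version: str
    online: bool


def in_canary(device_id: str, percent: int) -> bool:
    """Deterministically place a stable ~`percent`% of devices in the canary cohort."""
    bucket = int(hashlib.sha256(device_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def reconcile(devices: list[Device], stable: str, candidate: str, canary_percent: int) -> list[str]:
    """Move each online device to its desired version; offline devices catch up later."""
    updated = []
    for d in devices:
        desired = candidate if in_canary(d.device_id, canary_percent) else stable
        if d.online and d.running_version != desired:
            d.running_version = desired
            updated.append(d.device_id)
    return updated


fleet = [Device(f"edge-{i:04d}", "v1", online=(i % 3 != 0)) for i in range(30)]
changed = reconcile(fleet, stable="v1", candidate="v2", canary_percent=10)
print(f"{len(changed)} of {len(fleet)} devices moved to v2 this pass")
```

Because cohort membership is derived from the device ID, a device that was offline during the rollout lands in the same cohort when it reconnects, and running `reconcile` again changes nothing once every online device matches its desired version.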

Planning and Governance in the Regulatory Era

Organizations must now plan for algorithmic auditing and transparency reporting, multi-jurisdictional compliance across different regulatory frameworks, AI model governance with full lifecycle tracking and documentation, and risk management for autonomous AI systems.
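Lifecycle tracking is often implemented as an append-only log of model events (trained, evaluated, approved, deployed). The sketch below chains each entry to the previous one with a SHA-256 hash so that later edits to the history become detectable during an audit. The event names and fields are hypothetical and are not drawn from any particular regulation or model-registry product.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_event(log: list[dict], model: str, version: str, event: str, details: dict) -> dict:
    """Append an event whose hash covers its contents and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"model": model, "version": version, "event": event,
            "details": details, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_event(audit_log, "credit-risk", "1.4.0", "trained", {"dataset": "loans-2025-q1"})
append_event(audit_log, "credit-risk", "1.4.0", "approved", {"reviewer": "model-risk-team"})
print(verify(audit_log))                             # True
audit_log[0]["details"]["dataset"] = "other-data"    # tamper with history
print(verify(audit_log))                             # False
```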

Security Operations in the Age of Distributed AI

AI-powered cybersecurity has moved from experimental to operational, with AI systems now routinely detecting and responding to threats in real-time. However, the distributed nature of edge AI has created new security challenges including expanded attack surfaces across thousands of edge devices, secure model deployment and updates in untrusted environments, AI model integrity verification to prevent adversarial attacks, and privacy-preserving AI operations in regulated industries.
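A basic building block for model integrity on untrusted edge devices is to refuse to load any artifact whose authentication tag does not match the one issued by the publisher. The sketch uses an HMAC-SHA256 tag over the artifact bytes with a shared key; that is a simplifying assumption, since production systems typically use asymmetric signatures so that devices hold only a public key. It detects tampering with the model file in transit or at rest, but it does not by itself defend against adversarial inputs to an intact model.

```python
import hashlib
import hmac


def sign_artifact(model_bytes: bytes, key: bytes) -> str:
    """Publisher side: tag the exact bytes of the model file."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_artifact(model_bytes: bytes, tag: str, key: bytes) -> bool:
    """Device side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, model_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


key = b"hypothetical-shared-secret"
model = b"\x00weights-v2\x01"
tag = sign_artifact(model, key)
print(verify_artifact(model, tag, key))            # True
print(verify_artifact(model + b"\xff", tag, key))  # False: bytes were altered
```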

The Revolutionary Convergence: AI and Photonics

The convergence of AI and photonics represents one of the most transformative trends reshaping industries from communications to healthcare and advanced manufacturing. This convergence operates in two directions:

AI Enhancing Photonic Systems

AI is playing a pivotal role in design and optimization through generative adversarial networks (GANs) and deep neural networks (DNNs) that automate design processes for complex photonic integrated circuits (PICs), metaphotonics, and quantum devices. AI enhances photonic component manufacturing through real-time defect detection, predictive maintenance, and yield optimization. In advanced imaging and sensing, AI algorithms improve image quality, accelerate data analysis, and enable real-time adaptive optical systems crucial for medical imaging, autonomous vehicles, and environmental monitoring.

Photonics Accelerating AI Computations

Research in photonic neural networks (PNNs) promises faster and more energy-efficient AI processing than traditional electronic systems. Because photonic hardware uses light instead of electrons to carry and process signals, it is particularly well suited to the matrix operations central to AI algorithms. Photonic integrated circuits (PICs) integrate multiple photonic devices on a single chip, making them strong candidates for AI workloads in data centers and high-performance computing.
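The matrix operations in question are mostly matrix-vector products: a dense neural-network layer computes y = Wx. A photonic accelerator aims to perform that product in the optical domain, but as an analog system its outputs carry noise and limited precision. The toy NumPy model below compares an exact layer with one that adds Gaussian read-out noise scaled to the output magnitude; the noise model and its 1% level are assumptions for illustration, not properties of any specific photonic chip.

```python
import numpy as np


def dense_layer(W: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Exact digital reference: y = W @ x."""
    return W @ x


def analog_dense_layer(W: np.ndarray, x: np.ndarray, rel_noise: float, rng) -> np.ndarray:
    """Toy analog model: the same product plus Gaussian read-out noise whose
    standard deviation is `rel_noise` times the largest output magnitude."""
    y = W @ x
    scale = np.max(np.abs(y))
    return y + rng.normal(0.0, rel_noise * scale, size=y.shape)


rng = np.random.default_rng(42)
W = rng.normal(size=(256, 512))
x = rng.normal(size=512)
exact = dense_layer(W, x)
noisy = analog_dense_layer(W, x, rel_noise=0.01, rng=rng)
rel_error = np.linalg.norm(noisy - exact) / np.linalg.norm(exact)
print(f"relative error: {rel_error:.4f}")
```

Errors of this kind are one reason photonic AI research pays close attention to calibration and to workloads, such as inference, that tolerate reduced numerical precision.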

Neuromorphic photonics aims to build photonic systems that mimic the structure and function of the human brain, potentially revolutionizing AI by enabling faster, more efficient learning and computation. Quantum photonics opens further computational possibilities, and researchers are exploring it as a way to boost the performance and energy efficiency of machine learning algorithms.

Key Driving Forces and Challenges

Driving Forces: Optical links can carry far more data than electrical wires, with lower loss and less energy spent per bit, offering potential for ultra-fast data processing and reduced power consumption. Photonics can handle larger data volumes, making it well suited to scaling AI applications that require high-bandwidth, low-latency transmission. As traditional silicon-based electronics approach their physical limits, photonics offers a promising way past performance bottlenecks.

Challenges: Effective AI in photonics requires large, high-quality training datasets, and obtaining them remains a significant challenge. Integrating AI into silicon photonics involves complex multi-physics modeling and standardization issues that require dedicated R&D. Deploying AI models on photonic devices also requires specialized hardware interfaces that must address latency and processing-speed issues to achieve seamless integration.

The Future of Intelligent Infrastructure

The convergence of AI and photonics has solidified into a new era of “intelligent photonics,” delivering transformative value across industries from communications to healthcare to advanced manufacturing. It has created a powerful feedback loop, in which AI produces better photonic designs and more efficient photonic systems in turn accelerate AI, fundamentally reshaping how we approach computing, communication, and sensing technologies.

As we progress through the remainder of 2025 and beyond, the organizations that have successfully navigated these technological shifts—through strategic partnerships, innovative infrastructure choices, and forward-thinking investment in emerging technologies—are best positioned to capitalize on the unprecedented opportunities that AI continues to create across every sector of the global economy. The infrastructure foundation laid in 2025 will determine competitive advantage for the next decade.
